#3 What's up with protocols

An explainer on why the protocol layer captures most of the value

Yesterday, we looked at the different layers of the blockchain stack, the economic flywheels at play, and how the major business models of layers 1 and 2 work. We briefly glossed over the fact that most of the value is captured in layers 1 and 2; today’s post continues that thread and tries to understand why that is the case. Let’s jump into the world of protocols!

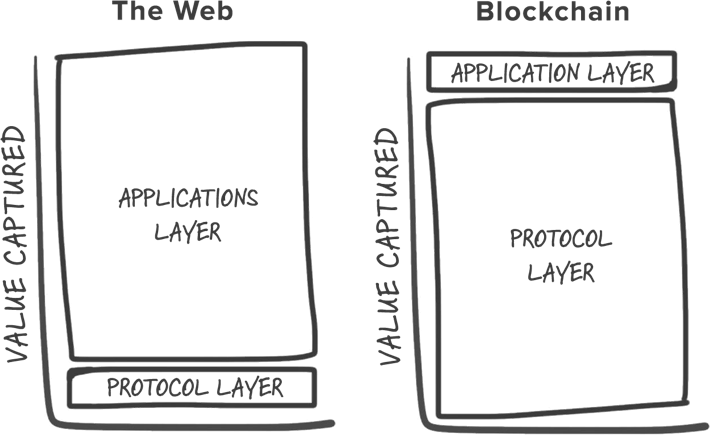

The entire internet was built on open protocols like HTTP that anyone, including users, developers and companies, could access equally. This level playing field paved the way for rapid innovation and the creation of powerful companies like Google, Amazon and Facebook. These open protocols were stateless and hence required an additional data layer for applications to work on top of. Since we did not know how to maintain the state of a network in a decentralized fashion, the data / application layer drove the centralization of the internet and the subsequent formation of these companies. Most of the value was therefore captured by applications built around that data.

Thicc protocols

Joel Monegro wrote a really good piece on this, “Fat Protocols”, in which he argued that blockchains have ‘fat’ protocols, where most of the value is captured at the protocol layer. The Bitcoin network’s market cap, for example, is roughly 1 trillion USD, but the biggest companies built on top of this network are valued at a few hundred million at best.

It is useful to note that two important forces made this possible: a shared data layer and the issuance of cryptographic tokens. Firstly, storing user data (blockchain transactions) across a decentralized network lowers the barriers to entry for new players and creates a more vibrant ecosystem on top. This alone would not be sufficient to drive widespread adoption. Secondly, tokens provide a much more powerful incentive for new networks to emerge.

Tokenized networks

The tech world has seen plenty of conflict within the same ecosystem, especially between complementary products (products whose appeal increases as their complement becomes more popular). Many businesses, for example, were built on the APIs of social networks, only to have the rules for using those APIs changed later on.

Token networks remove this friction by aligning network participants to work together toward a common goal— the growth of the network and the appreciation of the token

-Chris Dixon, Crypto Tokens: A Breakthrough in Open Network Design

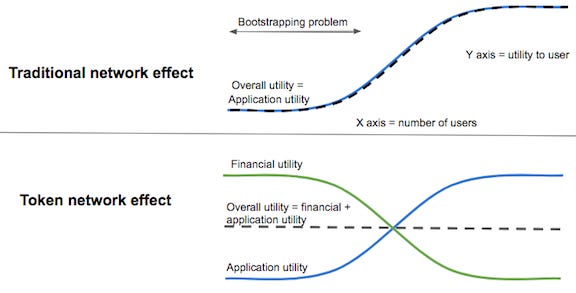

Here’s an illustration of how well-designed tokenized networks incentivize users to participate while network effects are still weak. In a traditional web2 setup, if you were building any kind of network, you would have to find ways of reaching a critical mass of participants, mostly through paid user acquisition and marketing. In a tokenized network, early adopters are issued tokens with speculative value for joining early. This provides financial utility long before the application’s own utility kicks in.
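To make the bootstrapping argument a little more concrete, here is a toy model in Python. Every function name and parameter in it is hypothetical and chosen only for illustration: each new user’s application utility grows with the number of other users on the network, while a decaying token-reward schedule pays the earliest joiners the most. The sketch finds the network size at which the application’s own utility first catches up with the token reward, roughly the point after which the product no longer leans on the token to attract users.

```python
from typing import Optional

# Toy model with HYPOTHETICAL parameters, for illustration only.


def app_utility(n_users: int, value_per_connection: float = 0.01) -> float:
    """Per-user utility from network effects: proportional to the number
    of *other* users on the network (hypothetical linear model)."""
    return value_per_connection * max(n_users - 1, 0)


def token_reward(join_order: int, initial_reward: float = 100.0,
                 decay: float = 0.999) -> float:
    """Tokens paid to the user who joins at position `join_order` (0-based).
    Rewards shrink geometrically, so early adopters earn the most
    (hypothetical issuance schedule)."""
    return initial_reward * decay ** join_order


def crossover_point(max_users: int = 100_000) -> Optional[int]:
    """Smallest network size n at which the newest user's app utility
    matches or exceeds the token reward that user received, or None."""
    for n in range(1, max_users + 1):
        # The n-th user joins at 0-based position n - 1.
        if app_utility(n) >= token_reward(n - 1):
            return n
    return None


if __name__ == "__main__":
    n = crossover_point()
    print(f"App utility catches up with the token reward at {n} users")
```

Changing `decay` or `value_per_connection` moves the crossover point. A real network’s curves would look nothing like this straight line and geometric decay, so treat the output as an illustration of the shape of the argument, not a forecast.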

Steemit is a good example of this phenomenon. Steemit is a Reddit-like network that rewards participants with tokens for upvoting, liking, commenting and so on. This kicks off a virtuous cycle: more participants join to receive these tokens, the network grows, and the token appreciates further, attracting even more participants (developers, users, investors), and so on. It also incentivizes developers to build complementary products within the ecosystem. Holders of Ether, the Ethereum network’s native token, for example, are incentivized to build new protocols on the network, since doing so can further increase the value of the token.

This is a fundamental shift because, until now, there has been no solid incentive for building protocols. You could only create software that runs on a protocol and then sell or host it. For-profit companies can now create protocols and hold a large share of the resulting tokens, whose value would appreciate as the network grows, and then progressively decentralize. This makes the case for much of the development effort going into protocols and explains why this layer is described as ‘fat’.

Now that we understand the importance of protocols and tokens, how do we design the moving parts of a tokenized network? There is an entire sub-discipline of economics dedicated to this, called mechanism design, but that’s a story for another day. Until then, let’s catch up at the After Party!