Once during my undergrad, a professor was rushing through a complex topic in his lecture, since we didn’t have many lectures left before the exam. But many students said that they didn’t quite get it.

My professor picked a student and asked him “What is the size of the universe?”

The student: “Infinite”

“How does your size compare to it?”

“Negligible”

He asked another student “How long has the universe been in existence?”

Student: “Since the beginning of time”

“How does your life span compare to it?”

“Negligible”

“When your existence in time and space is negligible, don’t you think you are worrying too much about this topic? Sometimes we don’t understand certain things, and that is okay”

While this was riddled with logical fallacies, the point it drives home is pertinent. Some principles known to us seem almost magical, with little intuitive explanation of why they hold. Two of my favourites are Benford’s law and our very own bell curve. My fascination with both comes from a common thread: finding order within chaos.

The Law of Anomalous Numbers

Here’s what Benford’s law states: pick almost any real-life data set (figures in newspapers, electricity bills, annual reports, sales data) and the first digits of its numbers follow a fixed distribution. The digit 1 appears as the leading significant digit about 30% of the time, while 9 appears as the leading significant digit less than 5% of the time, with the digits in between becoming steadily less frequent.

Not quite believable? Sounds like an oversimplification? Well, I thought so too. In fact, the (pseudo) statistician in me rejected this idea so vehemently that I actually downloaded some stock-market data sets and used some MS Excel jugglery to check whether it is actually true. The fact that I am writing this piece about Benford’s law should tell you what the results were.
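If you want to repeat that kind of check without Excel, here is a minimal Python sketch. It assumes the usual statement of Benford’s law, where digit d is expected to lead with probability log10(1 + 1/d), which gives about 30.1% for 1 and about 4.6% for 9. The function names are my own for illustration, and the sample is a stand-in (the first 1000 powers of 2), not the stock-market data I used.

```python
import math
from collections import Counter


def benford_expected():
    """Expected share of each leading digit d under Benford's law: log10(1 + 1/d)."""
    return {d: math.log10(1 + 1 / d) for d in range(1, 10)}


def leading_digit(x):
    """First significant digit of a non-zero int or float."""
    # Drop the sign, then strip leading zeros and the decimal point:
    # 0.00123 -> '123', 4500 -> '4500', 1e-07 -> '1e-07'
    text = str(abs(x)).lstrip("0.")
    return int(text[0])


def observed_shares(values):
    """Share of values starting with each digit 1..9 (zeros are skipped)."""
    digits = [leading_digit(v) for v in values if v != 0]
    counts = Counter(digits)
    return {d: counts.get(d, 0) / len(digits) for d in range(1, 10)}


# Stand-in data set (an assumption for this sketch): the first 1000 powers of 2.
sample = [2 ** k for k in range(1, 1001)]

expected = benford_expected()
observed = observed_shares(sample)

for d in range(1, 10):
    print(f"digit {d}: expected {expected[d]:.3f}, observed {observed[d]:.3f}")
```

To test your own data, replace `sample` with a list of numbers such as invoice amounts or closing prices. A large gap between the two columns is a reason to look closer, not proof of manipulation.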

Benford’s law is used in some countries to catch tax fraud and data manipulation. If the data doesn’t follow the expected distribution, they investigate. For the nerds, the Wikipedia page on Benford’s law covers the applications and the distributions where it doesn’t apply.

The Normal

While Benford’s law is hard to fathom at first glance, there is another principle that should be equally surprising. The surprise here is that it doesn’t surprise us at all, because we have all accepted it as the truth: the normal curve.

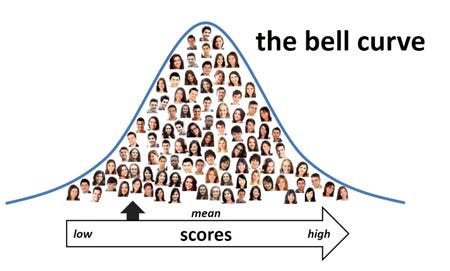

In probability theory, the central limit theorem (CLT) establishes that, in many situations, when independent random variables are summed up, their properly normalized sum tends toward a normal distribution (informally a bell curve).

In simple terms, the central limit theorem explains why the "bell curve" shows up so often in real-world data. Quantities like electronic noise or examination grades can be thought of as the combined result of many small, independent effects, so their final distribution is often (though not always) approximately normal.
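A quick simulation makes this easier to believe. The sketch below is an illustration under my own assumptions: it sums 30 uniform random numbers, which individually look nothing like a bell curve, standardizes each sum using the known mean (1/2) and variance (1/12) of a uniform variable on [0, 1), and checks how many results fall within one and two standard deviations. For a normal distribution those shares are roughly 68% and 95%. The function names and parameter values are hypothetical choices.

```python
import random


def standardized_sums(n_terms, n_samples, seed=0):
    """Sum n_terms uniform(0, 1) draws, n_samples times, and standardize each sum."""
    rng = random.Random(seed)
    mean = n_terms * 0.5            # each uniform(0, 1) draw has mean 1/2
    std = (n_terms / 12) ** 0.5     # and variance 1/12, so the sum has variance n/12
    return [
        (sum(rng.random() for _ in range(n_terms)) - mean) / std
        for _ in range(n_samples)
    ]


def share_within(values, k):
    """Fraction of values lying within k standard deviations of zero."""
    return sum(abs(v) <= k for v in values) / len(values)


sums = standardized_sums(n_terms=30, n_samples=20000)

print(f"within 1 sd: {share_within(sums, 1):.3f} (normal: about 0.683)")
print(f"within 2 sd: {share_within(sums, 2):.3f} (normal: about 0.954)")
```

Try lowering `n_terms` to 1 or 2 to see the shares drift away from the normal values. The bell shape emerges from the summing, not from the ingredients.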

Be it colleges or workplaces, most seem to have embraced the bell curve with open arms. While hatred for the bell curve runs among students and employees alike, the question “Why can’t everyone in my team be a good performer?” haunts many managers too.

Understanding the Normal

Is it cruel to squeeze a year’s effort into a 1-5 scale and force-fit the ratings into a bell curve?

Can we do away with this entire system of relative grading, like some companies claim to do?

Well, before we answer these questions, we need to address some concepts:

Performance vs. Potential: Performance appraisals evaluate your performance in the previous period and determine how much performance-based incentive you get (if any). They have no bearing on promotion. Potential assessment checks whether a person is a fit for the next role, i.e. whether they have the requisite skills and behaviours to excel in it. Remember, a person may be excellent at their present job but may not be a good fit for promotion.

Absolute vs. relative: In a math test, when everyone solves a question correctly, they are all given full marks. Simple, right? Everyone who achieves their objectives should be given their fair share. But the real-world problems we are expected to solve don’t have standard answers or procedures, nor is the syllabus limited to a certain degree of complexity. There is a degree of relativity that varies for employees and the company alike: market conditions, industry risks and so on. Absolute assessments are possible only where there is a clearly drawn scope. Otherwise, it’s all relative.

Feedback from the system: The bell curve holds true in isolation, but when there are feedback loops back into the system, you need to give it some thought. For example, in a system where appraisals are done only by functional bosses, an employee may focus only on tasks that directly benefit the function, and may in turn end up collaborating poorly with other teams.

Changing the Normal

With these concepts in mind, we can start to understand why the bell curve seems to be the preferred choice, though it certainly needs to be adapted. Here are some aspects to consider:

What do you think about your employees? General Electric famously had the 20-70-10 model, where it believed that 20% were good performers, 70% were average and 10% fell in the bottom. Depending on your talent acquisition strategy (mass hiring vs. selective), you might want to reshape your bell curve a little, i.e. decide whether you want a 20-60-20 model or a 15-70-15 model.

Frequency of assessment: Don’t wait for the end of the year to measure. A single year-end assessment can be highly skewed and filled with biases. Use a more frequent assessment cycle.

Metric of measurement: It is very important to clearly define performance metrics. Vague terms like “decision making” and “developing people” without any valid proxy metric can be the primary reason why people hate the bell curve.

The jury on the forced bell curve is still out, debating manager biases, power equations, the politics of force fitting, the lack of viable alternatives and so on. But while we wait for the verdict, we can learn to make the best of this chaos.